AI Model

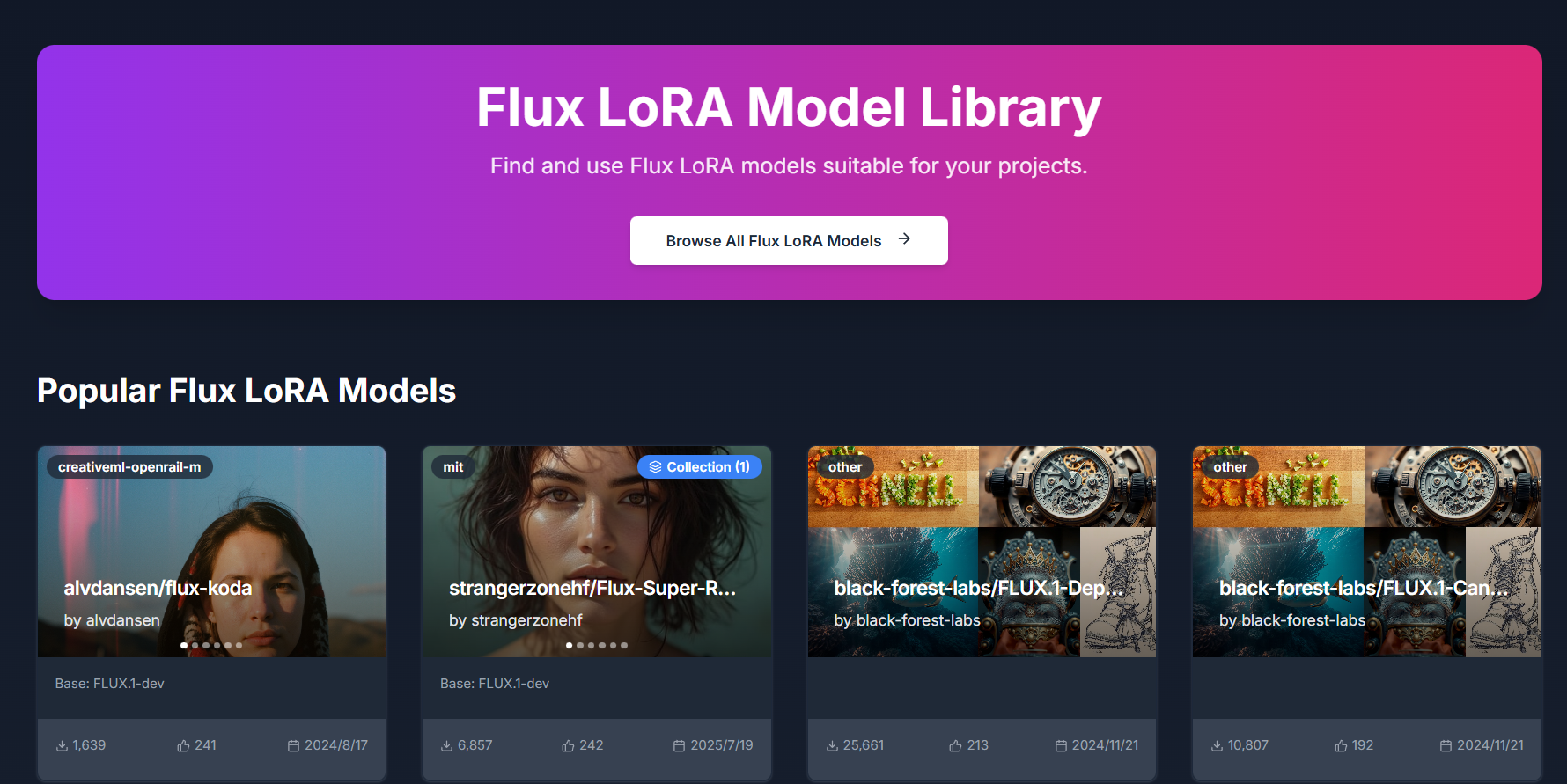

# AI Model: Foundation of Artificial Intelligence Systems

An **AI model** is a computational system designed to **learn patterns from data and make predictions, decisions, or generate content** without being explicitly programmed with rules for each task. AI models form the **core of artificial intelligence**, powering applications such as chatbots, image generators, recommendation systems, voice assistants, and autonomous agents.

AI models are trained using **machine learning and deep learning techniques**, and their performance typically improves as they are trained on more, and more representative, data.

## What Is an AI Model?

An AI model is created by running a training algorithm on a dataset so that the system captures the relationships, patterns, and structures within the data. Once trained, the model can analyze new inputs and produce outputs such as text, images, audio, video, or predictions.

AI models can be pretrained foundation models or models trained from scratch or adapted for a specific task.

---

## Types of AI Models

### Machine Learning Models

These models make predictions and classifications using statistical techniques such as linear regression, decision trees, and random forests.

### Deep Learning Models

Based on multi-layer neural networks, these models handle complex tasks like image recognition, speech processing, and natural language understanding.

### Generative AI Models

These models create new content, including text, images, audio, and video. Large language models are a common example.

### Computer Vision Models

Used to analyze images and videos for tasks such as object detection, facial recognition, and visual classification.

### Speech and Audio Models

Enable speech recognition, text-to-speech, and audio processing in voice assistants and media tools.

### Multimodal AI Models

Process and relate multiple data types, such as text, images, audio, and video, within a single model.

These categories overlap: a large language model, for example, is both a deep learning model and a generative AI model.

---

## How AI Models Are Trained

1. Data collection from structured or unstructured sources
2. Data cleaning and preprocessing
3. Model training using machine learning algorithms
4. Evaluation on held-out data and optimization for accuracy
5. Deployment into real-world applications

Modern approaches such as transfer learning, fine-tuning, and LoRA (low-rank adaptation) start from a pretrained model, which reduces the data and compute needed compared with training from scratch.

---

## Popular Use Cases of AI Models

- AI chatbots and virtual assistants
- Content creation and writing tools
- Image and video generation platforms
- Recommendation and personalization systems
- Fraud detection and predictive analytics
- Autonomous AI agents and automation tools
- Healthcare diagnostics and research

---

## Benefits of AI Models

- Automate complex processes
- Improve decision-making accuracy
- Scale operations efficiently
- Enable personalization at scale
- Reduce manual workload and costs

---

## Who Uses AI Models?

- Businesses and enterprises
- Developers and AI engineers
- Data scientists and researchers
- Startups and SaaS platforms
- Content creators and marketers
- Educational institutions

---

## Frequently Asked Questions (FAQ)

### What is an AI model?

An AI model is a trained system that learns from data to make predictions, decisions, or generate content automatically.

---

### What is the difference between an AI model and an algorithm?

An algorithm defines the learning procedure, while an AI model is the result of running that procedure on data: the learned parameters together with the structure that uses them.

---

### Can AI models be reused?

Yes. Pretrained AI models can be reused and adapted through fine-tuning for different tasks.

---

### Do AI models always need large datasets?

No. With transfer learning, a model pretrained on a large dataset can often be adapted to a new task using a much smaller one.

---

### Are AI models used in daily applications?

Yes. AI models are widely used in search engines, social media, voice assistants, recommendation systems, and productivity tools.
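To make the five training steps under "How AI Models Are Trained" more concrete, and to illustrate the FAQ's distinction between an algorithm and a model, here is a minimal Python sketch using one-variable linear regression. Everything in it is an illustrative assumption rather than part of the original article: the data is synthetic (it follows `y = 50 + 2x` exactly), and the names `LinearModel`, `clean`, `fit_line`, and `mean_squared_error` are hypothetical helpers. In this sketch `fit_line` plays the role of the algorithm, and the `LinearModel` it returns is the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearModel:
    """The trained artifact: two learned numbers plus a way to apply them."""
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def clean(rows):
    """Step 2: drop rows with a missing input or a missing label."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def fit_line(rows) -> LinearModel:
    """Step 3: ordinary least squares. This is the learning algorithm."""
    n = len(rows)
    mean_x = sum(x for x, _ in rows) / n
    mean_y = sum(y for _, y in rows) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in rows)
    var = sum((x - mean_x) ** 2 for x, _ in rows)
    slope = cov / var
    return LinearModel(slope=slope, intercept=mean_y - slope * mean_x)


def mean_squared_error(model: LinearModel, rows) -> float:
    """Step 4: average squared gap between predictions and true labels."""
    return sum((model.predict(x) - y) ** 2 for x, y in rows) / len(rows)


# Step 1: "collected" data. Synthetic (input, label) pairs; None marks a gap.
raw_rows = [
    (1.0, 52.0), (2.0, 54.0), (3.0, None), (4.0, 58.0), (5.0, 60.0),
    (6.0, 62.0), (None, 70.0), (7.0, 64.0), (8.0, 66.0),
]

rows = clean(raw_rows)                       # Step 2: 7 usable rows remain
train_rows, test_rows = rows[:5], rows[5:]   # hold out the last rows for evaluation

model = fit_line(train_rows)                      # Step 3: algorithm -> model
test_mse = mean_squared_error(model, test_rows)   # Step 4: evaluate on unseen rows

# Step 5: "deployment" here is simply calling the trained model on a new input.
prediction = model.predict(10.0)

print(f"slope={model.slope:.3f} intercept={model.intercept:.3f}")
print(f"held-out MSE={test_mse:.6f} prediction for x=10: {prediction:.2f}")
```

The same shape carries over to larger systems: the training procedure is code that can be rerun on new data, while the model is the saved output of one such run. Real projects usually rely on a library for training and evaluation rather than hand-written helpers like these.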